Tokenization & Lexical Analysis

Stephan Kreutzer

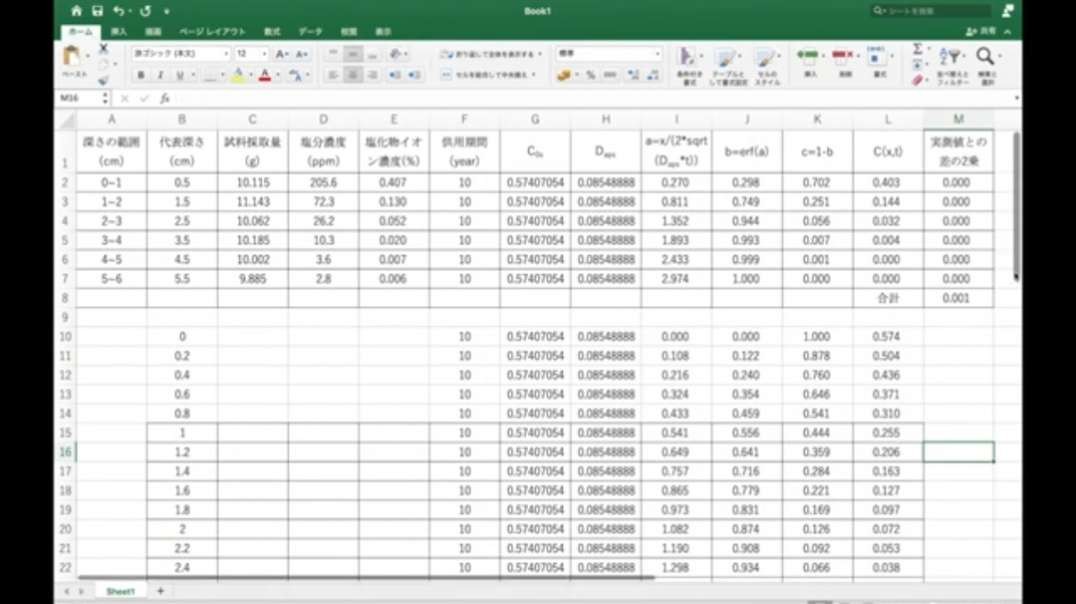

Loading (deserializing) structured input data into computer memory as an implicit chain of tokens, in order to prepare it for subsequent processing: syntactic/semantic analysis, conversion, parsing, translation or execution.
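As a rough illustration of the idea, here is a minimal sketch of a regex-based lexer. It is not the author's method: it assumes a tiny, hypothetical arithmetic-expression language, and the token categories (`NUMBER`, `IDENT`, `OP`, `LPAREN`, `RPAREN`) and the `tokenize` function are invented for this example. The generator yields the token chain lazily, one token at a time, so a later stage such as a parser can consume it without the whole sequence being materialized first.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str  # hypothetical token category, e.g. "NUMBER"
    text: str  # the exact characters matched in the input
    pos: int   # offset of the token's first character in the input


# Hypothetical token set for simple arithmetic expressions.
# Order matters: earlier alternatives win when several could match.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=]"),
    ("LPAREN", r"\("),
    ("RPAREN", r"\)"),
    ("SKIP", r"\s+"),      # whitespace separates tokens but is discarded
    ("MISMATCH", r"."),    # any other character is a lexical error
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> Iterator[Token]:
    """Turn a character stream into a lazily produced stream of tokens."""
    for match in MASTER.finditer(source):
        kind = match.lastgroup  # name of the alternative that matched
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {match.group()!r} at {match.start()}")
        yield Token(kind, match.group(), match.start())
```

For example, `list(tokenize("x = 3 * (y + 42)"))` produces an `IDENT`, `OP`, `NUMBER`, `OP`, `LPAREN`, `IDENT`, `OP`, `NUMBER`, `RPAREN` sequence, each token carrying its source offset for error reporting. Combining all patterns into one alternation keeps the scan to a single left-to-right pass over the input.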
